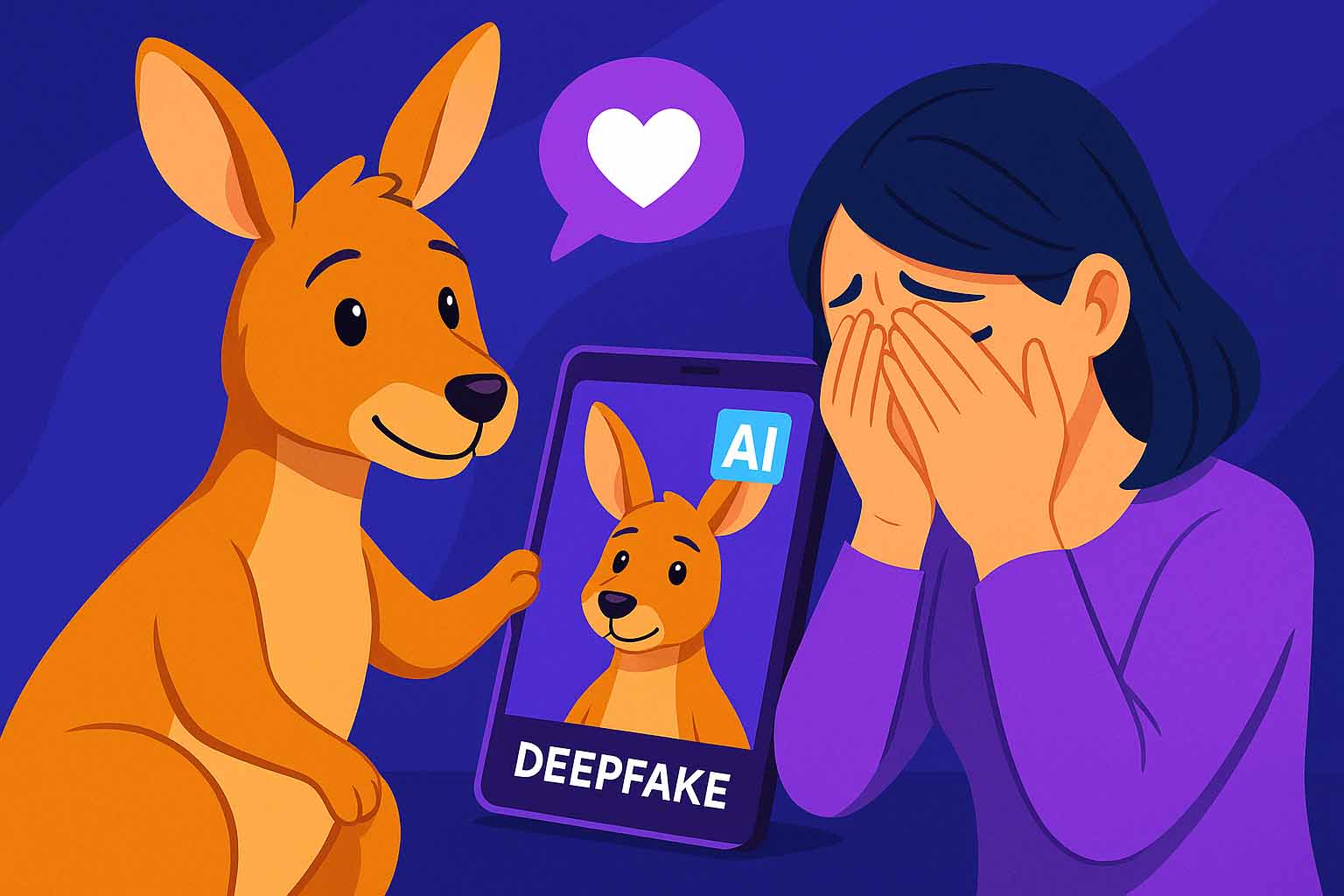

When an Emotional Support Kangaroo Almost Made It on a Plane: The AI Deepfake You Didn’t See Coming

Imagine millions of people around the world rooting for a little kangaroo, complete with a boarding pass and a tiny carry-on bag, as it struggles to get on a plane. It seems heartwarming and utterly believable, until you realize none of it actually happened. This surprisingly realistic but entirely AI-generated video has been blowing up online. It was crafted by the Instagram account Infinite Unreality, which specializes in daily doses of digital unreality.

How an AI-Generated Kangaroo Pulled at Our Heartstrings

What makes this viral moment so fascinating, and frightening, is how effortlessly AI tapped into what moves us. The tiny details of the kangaroo’s journey, the apparent emotions flashing across the airline staff’s faces, and small touches such as a passenger’s wedding ring all contribute to the illusion of realism.

Yet, look closer and the cracks start to show:

– The boarding pass is pure gibberish

– The gate agent’s name tag is eerily blank

– The passenger’s wedding ring appears inconsistently

These subtle glitches remind us that, for now, AI still struggles with small text and with keeping objects consistent from moment to moment, yet it is already very good at evoking empathy and engagement.

Why This Viral AI Creation Matters More Than Just the Cuteness Factor

This isn’t just another cute meme about a kangaroo. It’s a wake-up call. If AI can make us emotionally invest in a fictional animal at an airport, what else might it convince us of? We’re stepping into a wild new era where our eyes—and hearts—can’t always distinguish real from fake. The ethical implications are vast:

– Misinformation Risks: Such convincing AI creations can easily be weaponized to spread falsehoods.

– Emotional Manipulation: AI-generated content can be designed to trigger specific feelings to influence behavior.

– Trust Erosion: If we can’t trust what we see, it shakes the foundation of news, entertainment, and social interactions.

Learning to recognize the signs of AI-generated content is becoming an essential skill.

How to Spot AI-Generated Deepfakes Like the Emotional Support Kangaroo Video

Even as AI visuals become more seamless, some tell-tale signs persist:

– Unreadable or nonsensical text on documents or badges

– Inconsistent lighting or shadows

– Unnatural object behavior (like a wedding ring appearing mid-scene)

– Strange glitches or visual artifacts on human features

Training our eyes to recognize these nuances will help us navigate the increasingly blurred line between reality and fabrication.

—

Final Thoughts

The emotional support kangaroo video is a peek into the future—a future where AI not only mimics reality but also taps deeply into our emotions and perceptions. Staying curious and critical is our best defense. Keep exploring, stay informed, and let’s navigate the new digital landscape together.

—

Video source: Instagram account Infinite Unreality via YouTube

—

FAQ

Q1: What is this video about?

It shows an emotional support kangaroo trying to board a plane. The clip is deeply engaging yet entirely AI-generated, and it highlights the growing sophistication of AI deepfakes.

Q2: Why is this important?

Because it reveals how AI can manipulate emotions and perceptions, raising critical concerns about misinformation, emotional manipulation, and trust in digital content.

Q3: How can I spot AI-generated videos?

Look for inconsistencies like illegible text, mismatched objects, unnatural movements, or inexplicable changes such as disappearing or reappearing details.
